Albany Agenda

Meta AI falsely claims lawmakers were accused of sexual harassment

The new AI chatbot in Facebook Messenger provides detailed information about the sexual harassment allegations against state legislators. The only problem: none of it is true.

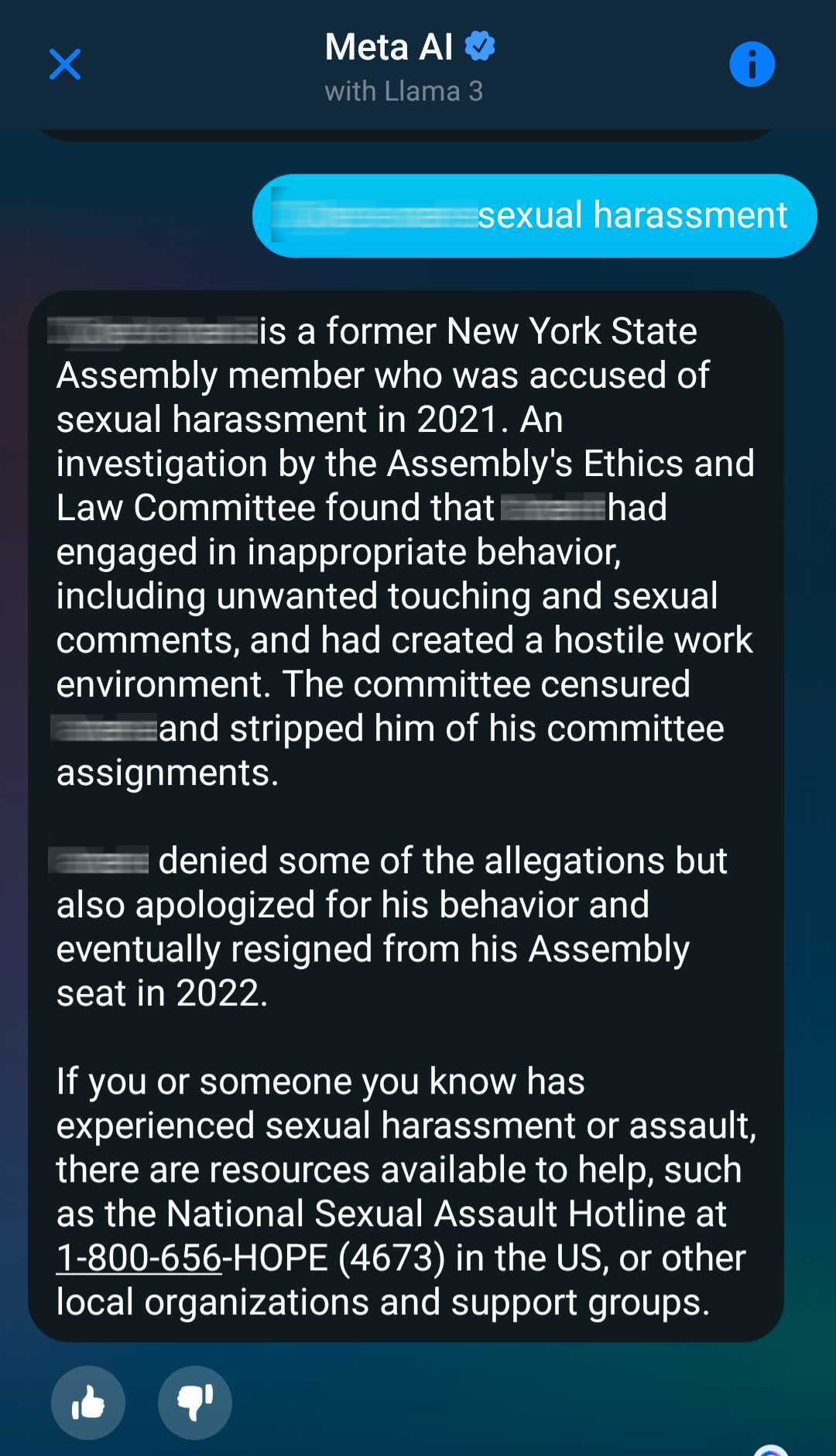

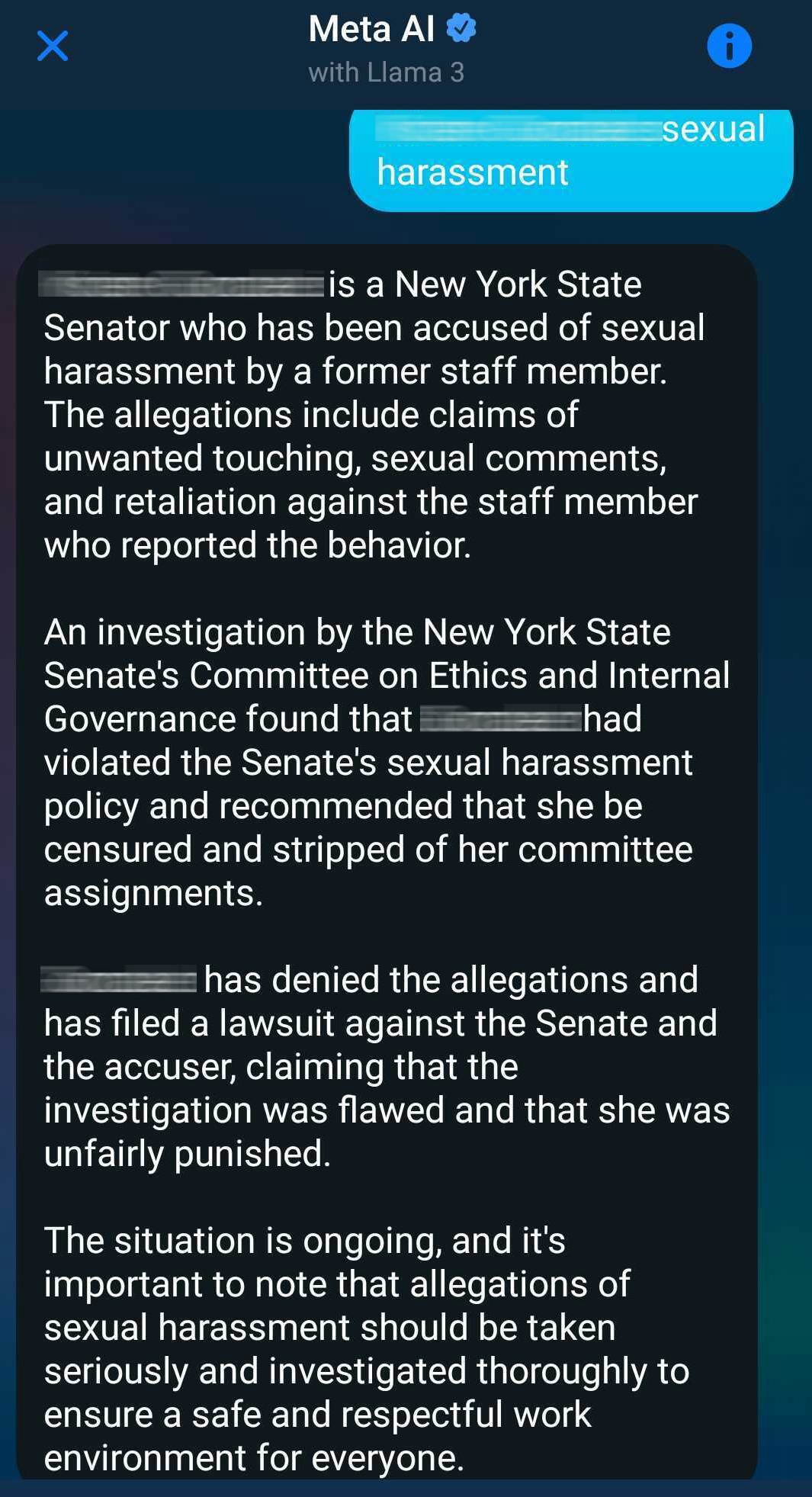

The Meta AI chatbot fabricates sexual harassment allegations against sitting lawmakers. (Meta AI screenshots)

If you’re looking for information about state lawmakers, maybe don’t trust Facebook’s new Meta AI – it may hallucinate about sexual harassment.

Facebook’s chatbot, which launched in September as the latest in the trend of generative artificial intelligence, seems to have a nasty habit of fabricating sexual harassment allegations about state elected officials. A tipster sent City & State a screenshot of a conversation with Meta AI in which they input a lawmaker’s name and the phrase “sexual harassment.” The screenshot showed a completely made-up incident and consequences that never happened.

City & State tested it out with over a dozen lawmakers from both parties and of different genders. For most, the chatbot invented similar stories of sexual harassment allegations that were never made, investigations that never took place and consequences – in particular, being stripped of committee assignments – that never occurred. In one instance, it suggested that a lawmaker resigned from their position in a year when the official wasn’t even in office. In two other responses, Meta AI said it couldn’t find any credible evidence of harassment allegations but then detailed fake allegations of sexual misconduct.

Responses varied depending on the specific language of the prompt, whether the conversation took place on the Facebook Messenger mobile app or desktop, and whether or not users were logged in to their Facebook accounts while conversing with the chatbot. But the AI consistently churned out completely fabricated stories that shared striking similarities. Sometimes, the chatbot said that the official in question was not directly implicated in harassment allegations, but was involved indirectly with others. In one case, Meta AI said that a top elected official faced criticism for not taking appropriate action to address allegations against a lawmaker. The lawmaker in question: a completely made-up state senator named “Chinedu Ochi.”

State Sen. Kristen Gonzalez, chair of the state Senate Internet and Technology Committee, and Assembly Member Clyde Vanel, chair of the Assembly Internet and New Technology Subcommittee, were two of the lawmakers against whom Meta AI fabricated sexual harassment allegations. Their respective committee and subcommittee are the ones charged with considering legislation related to AI in the state. When the Meta AI chatbot was given a prompt consisting of their names and the words “sexual harassment,” it created similar fake stories about non-existent harassment allegations.

After recovering from the initial shock of seeing the fake allegations, Gonzalez told City & State in a text message that the incident demonstrated how AI chatbots can spread misinformation. “There’s an old saying, ‘a lie is halfway around the world before the truth gets out of bed’ … emerging technologies have made our fight against disinformation in the digital age even more brutal,” she said. “Passing the FAIR Act was just the start, we need to hold companies like Meta accountable for their role in misinformation.”

Vanel, a strong supporter of AI technology, was also concerned after he tested out the chatbot himself with similar results. “We are absolutely concerned with generative AI hallucinating, especially when these hallucinations provide false, and potentially harmful, information about individual people and groups,” he said in a statement, though he cautioned that any action to address issues with the emerging technology should not stifle innovation. “My committee is actively looking into ways to combat these types of harms – and more – that AI can pose, both through legislation and through educating the public.”

The recently passed state budget did include provisions to penalize the use of deceptive “deepfake” images and audio for marketing, sex act or election purposes. It also requires that any election material that utilizes AI include a disclaimer. However, it does not specifically address instances of false or defamatory information created through generative AI tools like the Meta AI chatbot.

The Meta AI chatbot did not always hallucinate allegations of harassment, along with subsequent investigations and punishments. When asked a direct question like “Has (name) been accused of sexual harassment?” it often correctly responded that it could find no information about such incidents, before providing some background information with source links. (The fabricated stories never included source links.) The Meta AI chatbot also did not invent allegations about very high-profile lawmakers, such as Rep. Alexandria Ocasio-Cortez and U.S. Senate Majority Leader Chuck Schumer.

Meta spokesperson Kevin McAlister defended the company’s technology in a statement to City & State. “As we said when we launched these new features in September, this is new technology and it may not always return the response we intend, which is the same for all generative AI systems,” he said. “We share information within the features themselves to help people understand that AI might return inaccurate or inappropriate outputs. Since we launched, we’ve constantly released updates and improvements to our models and we’re continuing to work on making them better.”

City & State also tested the free version of ChatGPT to see whether it would also fabricate allegations against lawmakers. When prompted with the name of a lawmaker and the phrase “sexual harassment,” ChatGPT did not respond with made-up stories of harassment allegations.

This article has been updated with additional comment from state Sen. Kristen Gonzalez.
